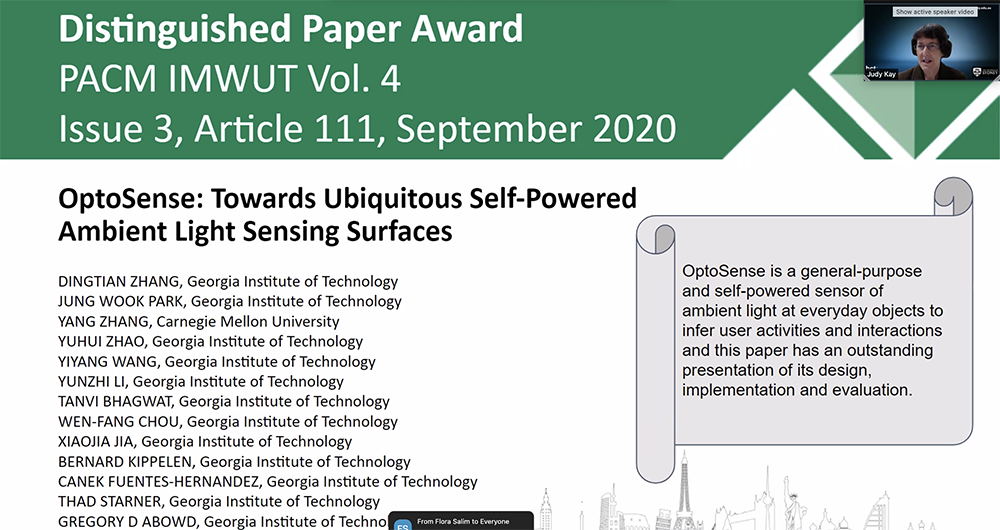

Tingyu Cheng, Bu Li, Yang Zhang, Yunzhi Li, Charles Ramey, Eui Min Jung, Yepu Cui, Sai Ganesh Swaminathan, Youngwook Do, Manos Tentzeris, Gregory D. Abowd, HyunJoo Oh (IMWUT 2021)

We create sensing technologies for next-generation computing devices to perceive users and their environments. We envision a future where physical AI systems will actively adapt to our daily tasks in a practical, inclusive, and sustainable manner. To achieve this vision, we 1) advance sensing techniques that recognize events and user activities, 2) empower emerging computing devices with efficient and fluid interactions, and 3) engineer smart systems that harness user interaction as a source of power.

We are actively looking for students at all levels. If you are interested in working with us, please first read the FAQ section. If you want to join the lab as a PhD student, please apply to the PhD program at UCLA.

Yes, HiLab is taking new students! You should apply to the ECE department and give Yang a heads-up. In general, we look for students with technical backgrounds who are interested in Human-Computer Interaction research. Since the graduate application process at UCLA is highly competitive, we encourage you to reach out and discuss your application with Yang beforehand. Reaching out to us and working with us is always a great way to initiate collaborations: if you are interested in our research, we will most likely be working in the same research field, and chances are good that we will be your future collaborators, colleagues, thesis committee members, or recommendation letter writers. So we would love to know about you!

Working with students is always exciting to us, and we are constantly looking for students who show strong self-motivation and can persist through the ups and downs of tackling research problems. A technical background in EE/CS is a plus but not a must. That said, more of our active projects require students with some experience in programming, embedded system development, and circuit design. The best way to reach out is to send us your CV, along with ideas for one or a few projects you want to work on, focusing on 1) why it is an important problem, 2) how previous research has addressed the problem, and 3) what new contribution the project can make. This process gives us a sense of what excites you, which helps with matchmaking.

HCI is a method, and it is also a discipline. The ultimate question HCI researchers aim to answer is how we can make computer technologies better for humans, that is, for users in the context of computing applications ranging from AR/VR, IoT, wearables, haptics, and digital health to accessibility and beyond. At HiLab, HCI means 1) we study human-related signals, 2) we focus on technologies that have user applications, and 3) we build systems with users in the loop and evaluate these systems with real user populations. If you want to learn more about HCI, see Scott Klemmer's excellent course.

We primarily publish at ACM conferences and journals on Human-Computer Interaction. Below is a list of venues where we have published.

Sure! Though we enjoy meeting new students, our time is limited and should be primarily focused on conducting research. If you want to schedule a meeting with a team member, please first send an email with your name, institution, the purpose of the meeting, potential time slots, and any other information you want to share. Lab members often have tight schedules, so please excuse us if we do not respond to your email promptly.

Be nice and be kind. That's what we expect of all lab members. UCLA ECE has a wide spectrum of research focuses, from AI/ML and quantum computing to millimeter-wave and photon detection systems, which requires each member of the community to set personal interests aside when evaluating each other's research contributions. A great example of lab values around Ethics, Diversity, and Community can be found on Jennifer Mankoff's Make4all lab webpage. We also practice the reasonable person principle and follow UCLA's Standards of Ethical Conduct in the lab.

HiLab is part of the ECE department in UCLA's Samueli School of Engineering. This website for the lab is actively being developed at the moment. So, stay tuned! There is more information on the lab space and resources to come. To contact the lab, please email yangzhang@ucla.edu.

Human-Centered Computing & Intelligent Sensing Lab

420 Westwood Plaza

Los Angeles, CA 90095

Email: yangzhang@ucla.edu